Posted on October 10, 2013 by Robert Stevens in Under Review

Nine Variations on Two Pictures: What’s in an Arrow Between Two Nodes

Overview

When designing an ontology, we often start by drawing some pictures, like the one below. This is a good starting point: it allows us to agree on the main categories and terms we use, and on the relations we want to consider between them. However, translating such a picture into an OWL ontology may be trickier than you think. In this kblog we consider various interpretations of simple “blob and line” diagrams and, thus, the questions you may wish to ask yourself when you use such diagrams.

The Authors

Uli Sattler and Robert Stevens

Information Management and BioHealth Informatics Groups

School of Computer Science

University of Manchester

Oxford Road

Manchester

United Kingdom

M13 9PL

sattler@cs.man.ac.uk and robert.stevens@Manchester.ac.uk

Introduction

When designing or talking about an ontology, we often start by drawing some pictures, like this one:

In many cases, this is a good thing: we sketch out relevant concepts, coin names for them, identify relevant relations between concepts, and name these as well. Suppose that, for this post, we ignore finer details such as the exact shape and form of the diagrams. Suppose we also ignore individuals or objects, i.e., we concentrate on concepts and the relationships between them. Then we find two kinds of arrows, or edges, between concepts:

- is-a links, i.e., unlabelled edges from a concept to a more general one, e.g., from Mouse to Mammal, and

- relationship links, i.e., labelled edges from a concept to another concept that describe a relationship between these, e.g., Mouse – hasPart → Tail.

Now, when we try to agree on such a picture and then attempt to cast it into OWL, we may find ourselves wondering how exactly to “translate” these edges into axioms.

Next, we discuss three possible readings for the first kind of link and six for the second. Together they form a fairly comprehensive catalogue of what the author of such a picture might have had in mind when drawing it. In our experience, which of these “readings” is intended often depends on the concepts that the edges link, and this can cause a lot of misunderstanding if left unresolved.

These are not novel observations; in fact, they go back to the 1970s and 1980s (see, e.g., the works by Woods, Brachman, and Schmolze (DOI:10.1109/MC.1983.1654194) (DOI:10.1207/s15516709cog0902_1) (DOI:10.1016/S0364-0213(85)80014-8)). At that time, people working in knowledge representation and reasoning tried to give precise, logic-based semantics to semantic networks, i.e., to pictures with nodes, edges and labels that were used to describe the meaning of, and relations between, terms.

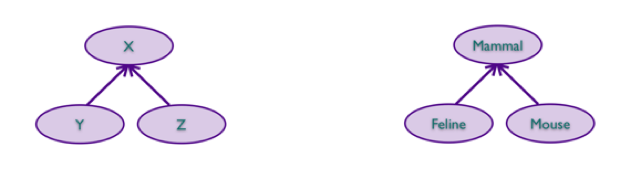

Is-a links between concepts

Let us start with is-a links between concepts, that is, with parts of pictures that look like the following examples:

Of course, we read these as “every Feline is a Mammal” or “every Z is an X”. Thus, when translating the two arrows on the right-hand side into OWL, we would add the following two axioms to our ontology:

    Feline SubClassOf: Mammal
    Mouse SubClassOf: Mammal

In this way, we ensure that every feline is a mammal and that every mouse is a mammal. Following the OWL semantics, this holds without exception; a discussion of exceptions is outside the scope of this kblog. Looking at the more anonymous picture on the left-hand side, we may also wonder whether it means that everything that is an X is also a Y or a Z. This corresponds to covering constraints in other conceptual modelling formalisms such as ER or UML diagrams. Of course, we know that this isn’t true for the right-hand picture, because there are mammals, such as dogs, that are neither felines nor mice. To capture such a covering constraint, we would add the following axiom to our ontology:

    X SubClassOf: Y or Z
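To make the difference between the plain is-a reading and the covering reading concrete, here is a minimal Python sketch that evaluates both on a small, hand-made finite interpretation. Each class is modelled simply as the set of its instances. The individuals (`tom`, `jerry`, `rex`) and the helper names are hypothetical illustrations, not part of any OWL tool.

```python
# A hypothetical finite interpretation: each class name maps to its instances.
interp = {
    "Mammal": {"tom", "jerry", "rex"},  # rex is a dog
    "Feline": {"tom"},
    "Mouse": {"jerry"},
}


def subclass_of(sub: set, sup: set) -> bool:
    """A SubClassOf: B holds iff every instance of A is an instance of B."""
    return sub <= sup


def covered_by(sup: set, *subs: set) -> bool:
    """A SubClassOf: B or C holds iff every instance of A is in B or in C."""
    return sup <= set().union(*subs)


# Both is-a axioms hold in this interpretation...
feline_ok = subclass_of(interp["Feline"], interp["Mammal"])
mouse_ok = subclass_of(interp["Mouse"], interp["Mammal"])
# ...but the covering axiom fails: rex is neither a Feline nor a Mouse.
covering_ok = covered_by(interp["Mammal"], interp["Feline"], interp["Mouse"])

print(feline_ok, mouse_ok, covering_ok)  # True True False
```

A single interpretation like this cannot show what an ontology entails. It can, however, show that the is-a axioms alone do not force the covering constraint.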

Finally, for both sides, we could further ensure that the two subclasses are disjoint, i.e., that nothing can be an instance of both Y and Z or of both Feline and Mouse:

    DisjointClasses: Y Z
    DisjointClasses: Feline Mouse

In summary, there are two additional readings of “is-a” edges between classes: both may be intended, only one of them, or neither. Faithfully capturing the intended meaning when translating into OWL leads not only to a more precise model but also to more entailments.
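The extra entailments can be checked by brute force in this simple case. These class axioms constrain each individual on its own, so it suffices to try all eight ways in which a single individual can belong, or not belong, to X, Y and Z. The following Python sketch is a toy check of our own, not an OWL reasoner, and all its function names are illustrative. It shows two things:

- with the covering axiom, an X that is not a Y must be a Z;
- with the disjointness axiom, a Y cannot also be a Z.

Neither follows from the is-a axioms alone.

```python
from itertools import product


# Each axiom is a test on one individual's memberships (in X, in Y, in Z).
def y_sub_x(x, y, z):
    return x or not y            # Y SubClassOf: X


def z_sub_x(x, y, z):
    return x or not z            # Z SubClassOf: X


def covering(x, y, z):
    return not x or y or z       # X SubClassOf: Y or Z


def disjoint(x, y, z):
    return not (y and z)         # DisjointClasses: Y Z


def entails(axioms, premise, conclusion):
    """True iff every membership assignment that satisfies all axioms
    and the premise also satisfies the conclusion."""
    for m in product([False, True], repeat=3):
        if all(ax(*m) for ax in axioms) and premise(*m) and not conclusion(*m):
            return False
    return True


def x_not_y(x, y, z):
    return x and not y


def is_z(x, y, z):
    return z


def is_y(x, y, z):
    return y


def not_z(x, y, z):
    return not z


isa = [y_sub_x, z_sub_x]
print(entails(isa, x_not_y, is_z))               # False
print(entails(isa + [covering], x_not_y, is_z))  # True
print(entails(isa, is_y, not_z))                 # False
print(entails(isa + [disjoint], is_y, not_z))    # True
```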

Relationship links between concepts

Now let us consider relationship links, like the ones in the following picture:

Understanding and capturing their meaning is a rather tricky task, and we start with the most straightforward readings.

First, we can read them as saying that every Mouse has some part that is a Tail, or that every instance of X is related, via p, to an instance of Y.

    Mouse SubClassOf: hasPart some Tail
    X SubClassOf: p some Y

Second and symmetrically, we can read them as saying that every Tail is a part of some Mouse, or that every instance of Y is related, via the inverse of P, to an instance of X.

    Tail SubClassOf: Inverse(hasPart) some Mouse
    Y SubClassOf: Inverse(p) some X
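Readings 1 and 2 are independent of each other. The following Python sketch shows this by evaluating both on a small hypothetical interpretation in which a cat also has a tail. All individual names (`m1`, `c1`, `t1`, `t2`) and helper names are made up for illustration. A relation is modelled as a set of (subject, object) pairs.

```python
def inverse(role):
    """Swap each (subject, object) pair, i.e. Inverse(role)."""
    return {(o, s) for (s, o) in role}


def some_values(sub, role, filler):
    """Sub SubClassOf: role some Filler, i.e. every instance of Sub has a
    role edge to at least one instance of Filler."""
    return all(any((d, e) in role for e in filler) for d in sub)


mouse = {"m1"}
tail = {"t1", "t2"}                       # t2 is a cat's tail
has_part = {("m1", "t1"), ("c1", "t2")}   # c1 is a cat

print(some_values(mouse, has_part, tail))           # True: reading 1 holds
print(some_values(tail, inverse(has_part), mouse))  # False: t2 is no mouse's part

# With a narrower class MouseTail in place of Tail, reading 2 holds.
mouse_tail = {"t1"}
print(some_values(mouse_tail, inverse(has_part), mouse))  # True
```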

We may find the first reading more “natural” than the second, but this may be entirely due to the direction in which we have drawn these edges and the fact that we know that other animals have tails, too.

Thirdly, we can read these edges as restricting the range of the relationship p or hasPart; that is, they express that whenever anything has an incoming p edge, it is a Y. Of course, it makes no sense to say that whenever anything is a part of something else, it is a Tail, hence we only translate the former:

    Thing SubClassOf: p only Y

There are alternative ways of expressing this axiom in OWL, e.g.:

    p Range: Y
    (Inverse(p) some Thing) SubClassOf: Y
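A finite model cannot prove that these forms are equivalent. Exhaustively checking every interpretation over a tiny domain is, however, a cheap sanity check. The Python sketch below assumes a hypothetical two-element domain {a, b}. It enumerates all 64 choices of Y and p and confirms that the three formulations of reading 3 give the same verdict in each.

```python
from itertools import chain, combinations, product


def subsets(items):
    """All subsets of a finite collection, as Python sets."""
    items = list(items)
    return [set(c) for c in chain.from_iterable(
        combinations(items, r) for r in range(len(items) + 1))]


def only_form(domain, p, y):
    # Thing SubClassOf: p only Y
    return all(e in y for d in domain for (s, e) in p if s == d)


def range_form(domain, p, y):
    # p Range: Y (every p-successor is a Y)
    return all(e in y for (_, e) in p)


def inverse_some_form(domain, p, y):
    # (Inverse(p) some Thing) SubClassOf: Y
    has_incoming = {d for d in domain if any((s, d) in p for s in domain)}
    return has_incoming <= y


domain = {"a", "b"}
pairs = list(product(sorted(domain), repeat=2))  # all possible p edges
checked = 0
for y in subsets(domain):
    for p in subsets(pairs):
        verdicts = {only_form(domain, p, y), range_form(domain, p, y),
                    inverse_some_form(domain, p, y)}
        assert len(verdicts) == 1, (p, y)  # all three forms agree
        checked += 1
print(checked, "interpretations checked")  # 64 interpretations checked
```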

We may find this translation odd, yet if we imagine we had drawn a similar picture with “Teacher teaches courses”, then we may find it less odd.

Fourthly and symmetrically, we can read these edges as restricting the domain of the property labelling them. That is, they say that anything that has an outgoing p edge is an X, or that anything that can possibly have a part is a Mouse. Again, the latter reading makes little sense (though it does make some sense for our teacher/course example), so we only translate the former:

    Thing SubClassOf: Inverse(p) only X

Again, there are alternative ways of expressing this axiom in OWL, e.g.:

    p Domain: X
    (p some Thing) SubClassOf: X
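Domain (and range) readings are easily confused with reading 1, but they behave quite differently. They constrain *who may* have a p edge rather than requiring anyone to have one. The following Python sketch uses a hypothetical Teacher/teaches/Course interpretation with made-up individuals. In it, a teacher who teaches nothing satisfies the domain reading while violating reading 1.

```python
def domain_reading(p, x):
    """p Domain: X, i.e. anything with an outgoing p edge is an X."""
    return all(s in x for (s, _) in p)


def existential_reading(x, p, y):
    """X SubClassOf: p some Y, i.e. every X has a p edge to some Y."""
    return all(any((d, e) in p for e in y) for d in x)


teacher = {"alice", "bob"}
course = {"owl101"}
teaches = {("alice", "owl101")}  # bob teaches nothing

print(domain_reading(teaches, teacher))               # True
print(existential_reading(teacher, teaches, course))  # False: bob teaches nothing

# A non-teacher who teaches something violates the domain reading.
print(domain_reading(teaches | {("carol", "owl101")}, teacher))  # False
```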

Finally, for readings 5 and 6, we can understand these links as restricting the number of incoming or outgoing edges to at most one. We could clearly read the right-hand picture as saying that every Mouse has at most one Tail and/or that every Tail is part of at most one Mouse:

    Mouse SubClassOf: hasPart max 1 Tail
    Tail SubClassOf: Inverse(hasPart) max 1 Mouse
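Readings 5 and 6 are qualified cardinality restrictions, so checking them on a finite interpretation amounts to counting edges. The following Python sketch again uses hypothetical individuals and helper names. In it, one mouse with two tails is enough to break reading 5, while reading 6 still holds.

```python
def inverse(role):
    """Swap each (subject, object) pair, i.e. Inverse(role)."""
    return {(o, s) for (s, o) in role}


def at_most(sub, role, n, filler):
    """Sub SubClassOf: role max n Filler, i.e. every instance of Sub has at
    most n distinct role-successors that are instances of Filler."""
    return all(
        sum(1 for (s, e) in role if s == d and e in filler) <= n
        for d in sub)


mouse = {"m1", "m2"}
tail = {"t1", "t2", "t3"}
has_part = {("m1", "t1"), ("m2", "t2"), ("m2", "t3")}  # m2 has two tails

print(at_most(mouse, has_part, 1, tail))           # False: reading 5 fails for m2
print(at_most(tail, inverse(has_part), 1, mouse))  # True: reading 6 holds
```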

Interestingly, the six readings can be combined. For our Mouse and Tail example, for instance, we could choose to apply readings 1, 5, and 6; if we rename the class Tail to MouseTail, we could additionally adopt reading 2.

If X is Teacher, p is teaches, and Y is Course, we could reasonably choose to adopt readings 1–4, and possibly even 6, depending on the kind of courses we model.
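A compact way to compare the readings is to evaluate all six on one interpretation. The Python sketch below does so for a hypothetical Teacher/teaches/Course interpretation in which alice teaches two courses. The individuals and function names are illustrative assumptions. A single model only shows which readings are consistent with that data, not which ones the modeller intends.

```python
def inverse(role):
    """Swap each (subject, object) pair, i.e. Inverse(role)."""
    return {(o, s) for (s, o) in role}


def some(sub, role, filler):
    # Sub SubClassOf: role some Filler
    return all(any((d, e) in role for e in filler) for d in sub)


def at_most_one(sub, role, filler):
    # Sub SubClassOf: role max 1 Filler
    return all(sum(1 for (s, e) in role if s == d and e in filler) <= 1
               for d in sub)


def readings(x, p, y):
    """Evaluate the six readings of an edge X --p--> Y as {number: bool}."""
    return {
        1: some(x, p, y),
        2: some(y, inverse(p), x),
        3: all(e in y for (_, e) in p),   # range
        4: all(s in x for (s, _) in p),   # domain
        5: at_most_one(x, p, y),
        6: at_most_one(y, inverse(p), x),
    }


teacher = {"alice", "bob"}
course = {"owl101", "kr201", "ml301"}
teaches = {("alice", "owl101"), ("alice", "kr201"), ("bob", "ml301")}

result = readings(teacher, teaches, course)
print([n for n, holds in result.items() if holds])  # [1, 2, 3, 4, 6]
```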

Summary

There are many ways of interpreting pictures with nodes and arrows between them. Knowing these possible interpretations should help us make the right decisions when translating them into OWL. Pictures are useful for sketching but have their ambiguities; OWL axioms have a precise semantics (DOI:10.1186/1471-2105-8-57). Asking the appropriate questions of your picture can help draw out what you really mean to say and what you need to say.
